GPT-Neo

From the makers of GPT-Neo, EleutherAI:

“GPT-Neo is the code name for a family of transformer-based language models loosely styled around the GPT architecture. Our primary goal is to train an equivalent model to the full-sized GPT-3 and make it available to the public under an open license.”

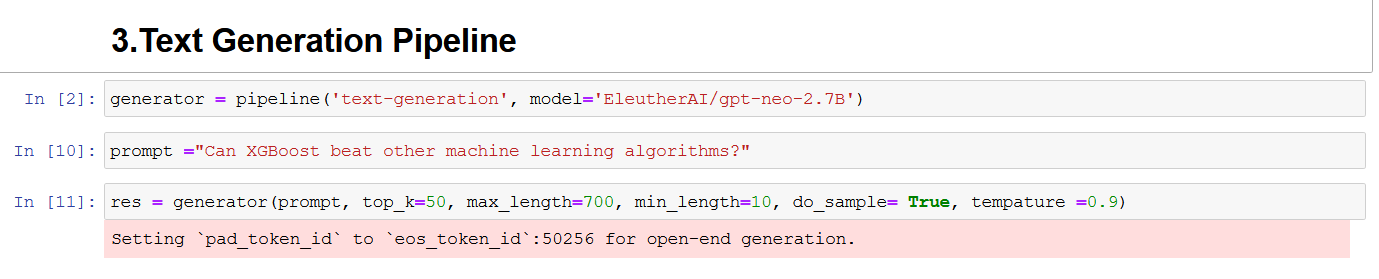

Text generation with GPT-Neo

Generating text from a short prompt, such as a heading, is one of GPT-Neo's features. Here we show a blog post that GPT-Neo generated when we gave it the prompt “Can XGBoost beat other machine learning algorithms?”

It wrote the following:

XGBoost is one of the most popular machine learning algorithms out there. It is often used by large organizations and universities for the purpose of predictive analytics and is a powerful tool.

So, what is so special about it, that makes it stand out from the rest of the crowd? In this blog post, I want to discuss some of the shortcomings of XGBoost and how it can be improved. This includes explaining what kind of problem it solves, what type of predictive models it can produce, and its limitations and potential.

The shortcomings of the algorithm

XGBoost is an algorithm that tries to optimize a predictive model based on its predictors. Like many other machine learning algorithms, XGBoost is capable to generalize well, if the input data is well-suited to the model. However, there are some shortcomings that XGBoost might not have been able to tackle.

What is the main difference between XGBoost and other machine learning methods?

XGBoost has a few clear advantages over most other methods. Some of the most obvious advantages are as follows:

A: Less complex: It is easy to train model with xgboost.

B: High accuracy: The algorithm is capable to generalize well.

XGBoost is not the only machine learning algorithm. There are several other algorithms which can be used to solve the same problem. For example, XGBoost is based on a regression problem. These methods do not only solve regression problems but also perform other tasks such as classification and even can work as the classifier itself. The comparison between these different machine learning algorithms is fairly complicated. However, I can compare the two methods using a simple example:

The aim is to predict the price of Apple stock.

The problem is a regression problem. The XGBoost algorithm uses the price of Apple stock as input and produces the price of Apple stock based on the past prices.

If I were to use a classic regression algorithm (for example, lasso), then the input would be the price of Apple stock and the output would be the price of Apple stock. This does not make too much sense. The output is not the price but the price that this algorithm predicts with the input.

For most other algorithms, the input is the price of Apple stock and the output is the price predicted using the price of Apple stock. This makes more sense: the algorithm predicts which price of Apple stock is the closest to the actual price.

How it solves the problem

XGBoost solves the problem by doing a regression. However, what it does not take into account is your data. Basically, XGBoost tries to predict the price of Apple stock based on the price of Apple stock and the past history of price.

However, in many cases, this history is much more important than the price itself. This history can contain the following information (taken from Wikipedia):

Past history of Apple stock price. How many times does Apple stock price go up and down? How much has it performed over time? Has Apple stock price grown or shrunk?

To answer the question, you should use previous data and try to predict it using XGBoost.

How XGBoost could

The text written by GPT-Neo makes sense, so we think GPT-Neo will be a game changer in natural language processing (NLP).

For more information about GPT-Neo:

https://www.eleuther.ai/projects/gpt-neo/

https://huggingface.co/EleutherAI

https://github.com/EleutherAI/gpt-neo
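The post does not say how the sample above was produced. One way to run a similar experiment yourself is the Hugging Face `transformers` library, which hosts the EleutherAI checkpoints linked above. What follows is a minimal sketch under stated assumptions. It uses the `EleutherAI/gpt-neo-1.3B` checkpoint, which is a multi-gigabyte download. A smaller 125M-parameter model is also listed on the EleutherAI Hugging Face page. The sampling settings (`temperature=0.9`, 200 new tokens, seed 42) and the helper name `generate_text` are illustrative choices, not the settings used for this post.

```python
# Minimal sketch: prompt a GPT-Neo checkpoint through the Hugging Face pipeline API.
# Assumes `pip install transformers torch`. The model size, sampling settings and the
# helper name `generate_text` are illustrative, not the settings used for this post.

MODEL_NAME = "EleutherAI/gpt-neo-1.3B"
PROMPT = "Can XGBoost beat other machine learning algorithms?"


def generate_text(prompt: str, model_name: str = MODEL_NAME,
                  max_new_tokens: int = 200, seed: int = 42) -> str:
    """Return GPT-Neo's continuation of `prompt`, without the prompt itself."""
    # Imported lazily so this module can be loaded without transformers installed.
    from transformers import pipeline, set_seed

    set_seed(seed)  # make sampling repeatable across runs
    generator = pipeline("text-generation", model=model_name)
    outputs = generator(
        prompt,
        max_new_tokens=max_new_tokens,
        do_sample=True,          # sample instead of greedy decoding for more varied text
        temperature=0.9,         # below 1.0 slightly sharpens the token distribution
        return_full_text=False,  # return only the newly generated text
    )
    return outputs[0]["generated_text"]


if __name__ == "__main__":
    print(PROMPT + generate_text(PROMPT))
```

Because the text is sampled, different seeds or model sizes will produce different continuations. Do not expect your output to match the blog post above word for word.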