Introduction

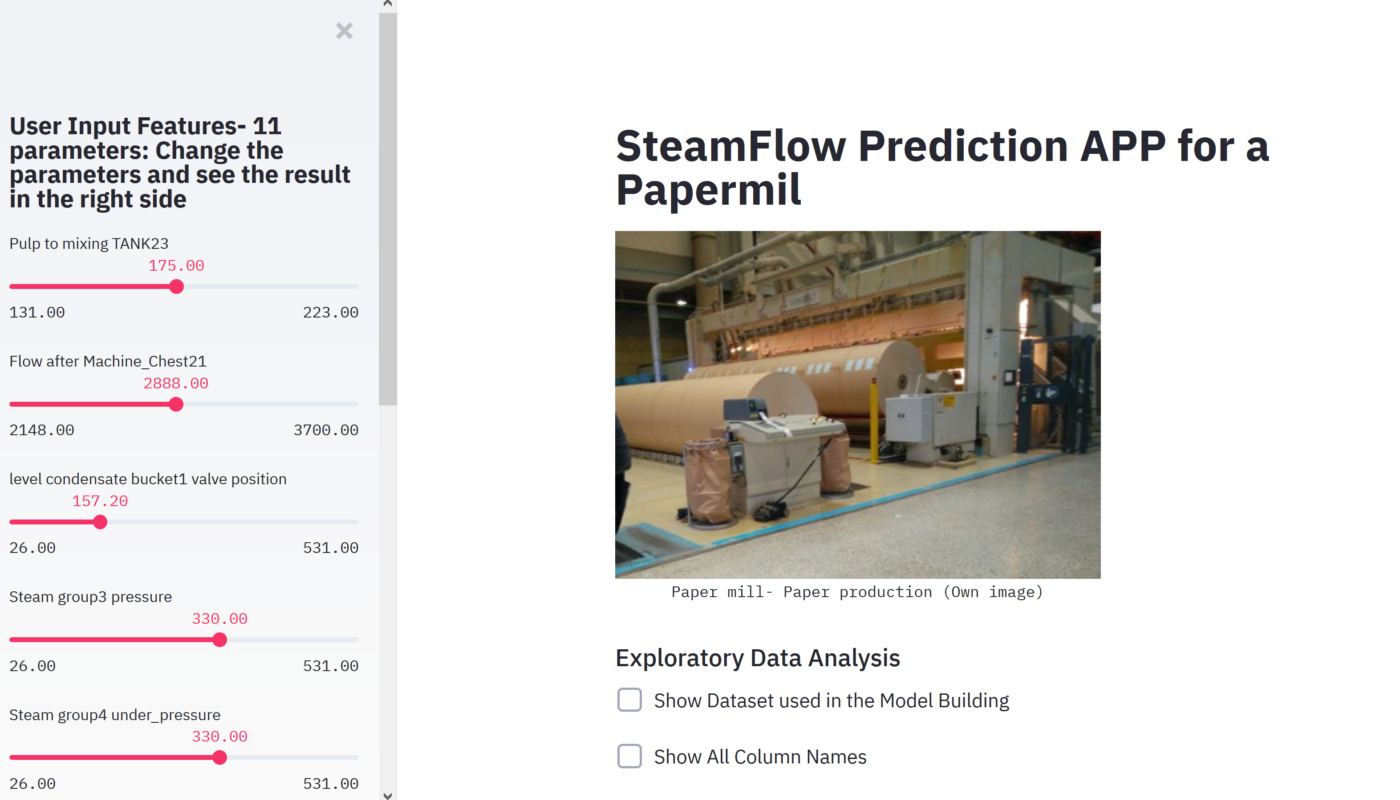

Pulp and paper is one of the biggest process industries in Sweden. Making a paper roll requires water; producing a roll of paper may need 16-22 tons of water per hour. Digitizing a process industry such as pulp and paper can take months or even years. We try to digitize one part of the paper mill by using machine learning methods to predict the water flow a paper machine needs to make a paper roll. We have collected data from a paper machine, and we will try a few different machine learning algorithms, apply the one with the lowest error, look at its predictions, and try to show the explainability of the model.

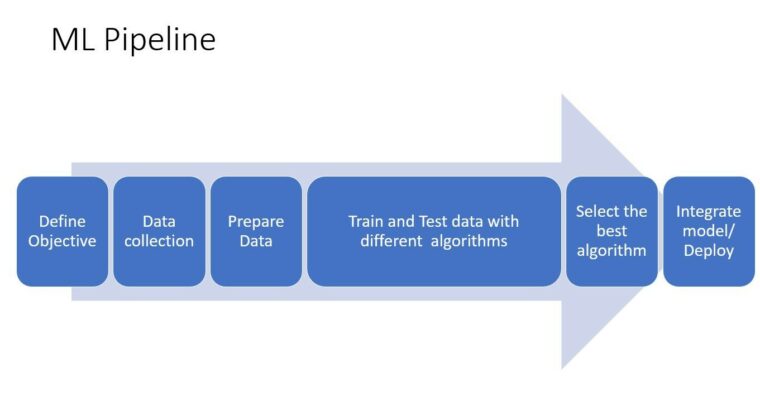

The machine learning pipeline

To predict the steam flow, or to make any other kind of prediction, we need to go through the machine learning pipeline. A machine learning pipeline is simply the process of implementing machine learning or predictive analytics: data gathering, data preprocessing, training and testing, and deployment.

Training and testing data

Training and testing datasets are vital to the machine learning pipeline in any project. We divide the whole dataset into two parts, training and testing, in most cases in an 80/20 ratio. We train the model on the training data with one or more machine learning algorithms; once the model is created, we test it on the testing dataset and measure the error it makes. The lower the error, the better the model.
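As a rough illustration of the 80/20 split described above, here is a minimal sketch in plain Python. The function name `train_test_split_rows`, the seed, and the synthetic rows are assumptions made for this example and are not taken from the project; in a notebook one would more likely use a library helper such as scikit-learn's `train_test_split`.

```python
import random


def train_test_split_rows(rows, test_ratio=0.2, seed=42):
    """Shuffle a copy of `rows` and split it into (train, test) lists."""
    if not 0.0 < test_ratio < 1.0:
        raise ValueError("test_ratio must be strictly between 0 and 1")
    shuffled = list(rows)                  # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded shuffle for reproducibility
    n_test = int(round(len(shuffled) * test_ratio))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical dataset: 4000 rows of (feature, target) pairs.
rows = [(i, i * 0.5) for i in range(4000)]
train, test = train_test_split_rows(rows)
print(len(train), len(test))
```

The split is random, so every row ends up in exactly one of the two parts. For data recorded over time, a chronological split may be the safer choice.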

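There are many ways of doing training and testing. k-fold cross-validation (where k is an integer) is one of the most widely used and effective among them, and it is especially useful when we have little data. The data is split into k folds, and each fold serves once as the test set while the remaining folds are used for training.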
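To make the idea of k-fold cross-validation concrete, the sketch below generates the train and test indices for each fold in plain Python. The helper `k_fold_indices` is a hypothetical name used only for this illustration; it does not shuffle the data and is not the code used in the project.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) index lists for k contiguous folds."""
    if k < 2 or k > n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        stop = start + size
        test_idx = list(range(start, stop))
        train_idx = list(range(0, start)) + list(range(stop, n_samples))
        yield train_idx, test_idx
        start = stop


# Example: 10 samples split into 5 folds of 2 test samples each.
folds = list(k_fold_indices(10, 5))
for train_idx, test_idx in folds:
    print("test:", test_idx, "train size:", len(train_idx))
```

A model would be trained and scored once per fold, and the fold scores are then averaged to give a more stable error estimate than a single split.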

There are many ML frameworks and tools and many different algorithms. We will test a few algorithms with two different tools. One is Jupyter Notebook with Anaconda, using the Python 3 programming language; the other is Orange 3, a data mining tool.

In Jupyter Notebook

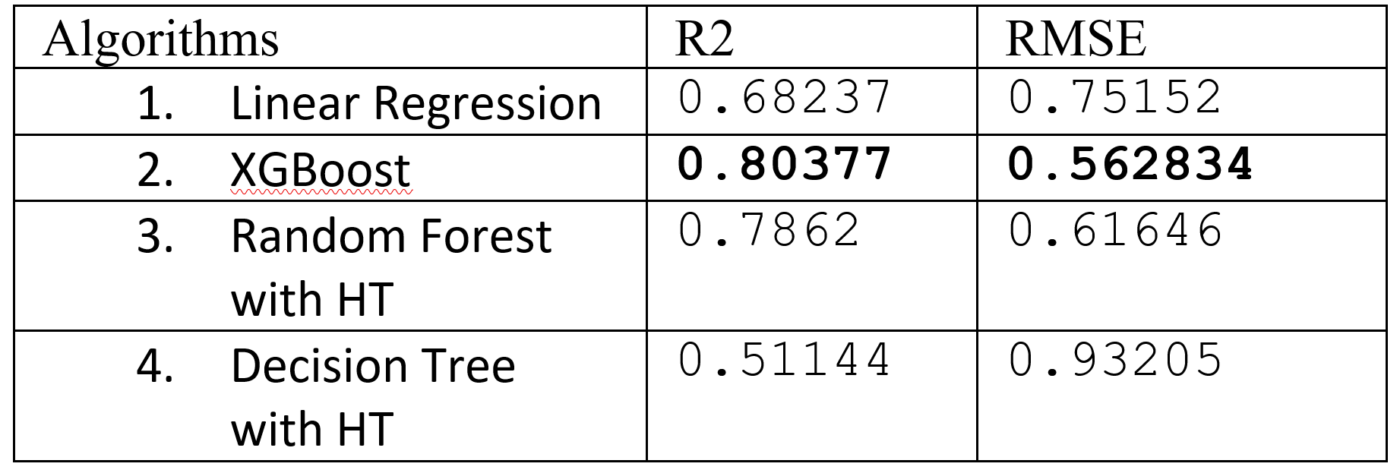

In Jupyter Notebook, we used four different algorithms: linear regression, XGBoost (eXtreme Gradient Boosting), random forest, and decision trees with hyperparameter tuning. For each one we compute R2 (the coefficient of determination), which indicates how well the model fits the dataset, and RMSE (root mean squared error), which is approximately the standard deviation of the residuals (prediction errors).
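The two metrics can be written out in a few lines of plain Python. This is a minimal sketch with made-up numbers, not the project's results; libraries such as scikit-learn offer equivalent functions.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the mean squared residual."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - (residual SS / total SS)."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical measured and predicted flows (tons per hour).
y_true = [16.0, 18.0, 20.0, 22.0]
y_pred = [17.0, 18.0, 19.0, 22.0]

print("RMSE:", rmse(y_true, y_pred))
print("R2:", r2_score(y_true, y_pred))
```

A lower RMSE and an R2 closer to 1 both indicate a better fit.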

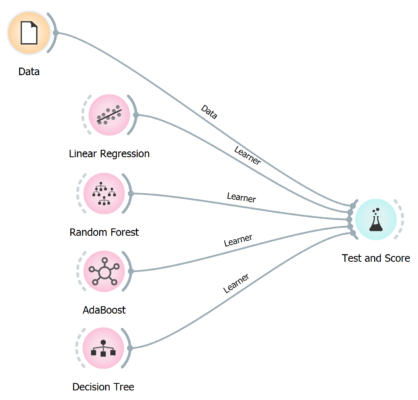

Orange 3

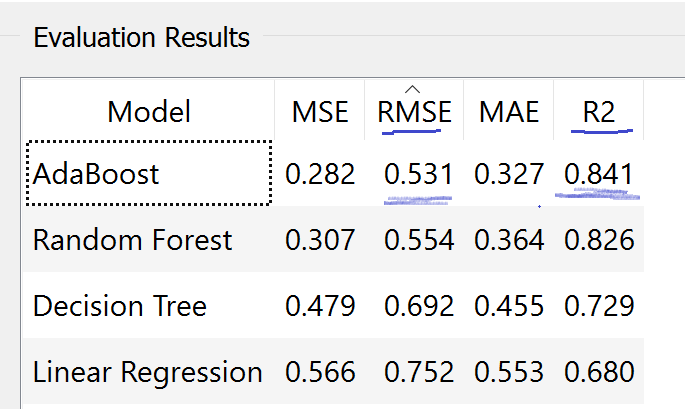

Now we do the same thing with the Orange 3 data mining tool. It is an open-source data visualization, data mining, and analysis toolkit in which data mining and machine learning are done through visual interfaces.

Selecting the best model

Comparing the two result images above, we found that in Jupyter the XGBoost algorithm gives the minimum RMSE, 0.562834, and the maximum R2, 0.80377.

In Orange 3, the AdaBoost algorithm gives the minimum RMSE, 0.531, and the maximum R2, 0.841.

The two tools thus gave us two different best algorithms. The question now is which one we should use to build the model and put into real-life use.

We would like to find out how XGBoost and AdaBoost differ and which one has the advantage. Both are tree-based ensemble algorithms that use boosting. Boosting combines weak learners into a very accurate prediction algorithm. A single decision tree is a weak learner, and combining many such weak learners into one gives us the AdaBoost (Adaptive Boosting) algorithm.

AdaBoost tends to overfit when the data is noisy, and it has only a few hyperparameters to tune when trying to improve the model. On the other hand, the algorithm is easy to understand and visualize. It is not optimized for speed, which is why it is much slower than XGBoost.

Now let’s discuss XGBoost. It is also a tree-based ensemble learning algorithm, and it uses gradient boosting to minimize the error of sequential models. Its optimizations include parallel processing, tree pruning, handling of missing data, and regularization to avoid overfitting. That is why it performs so well and is used in many competitions, such as those on Kaggle, and by many others.

Taking the above discussion and figures into consideration, we decided to do the implementation using the XGBoost algorithm, as we expect it to perform better than AdaBoost in production.

Implementation and deployment

There are many ways to implement and deploy the machine learning models we have created. We save the XGBoost model from the Jupyter notebook as a pickle file and use the Streamlit library in Python to build the application. Streamlit is an open-source app framework that lets machine learning and data science teams develop apps rapidly. For deployment we used the Streamlit sharing service, a free service where Streamlit app developers can easily host their apps from GitHub.
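The pickle step can be sketched as follows. To keep the example self-contained, a plain dictionary of linear coefficients stands in for the trained XGBoost model; the file name `model.pkl`, the `predict` helper, and the numbers are assumptions for illustration only.

```python
import pickle
import tempfile
from pathlib import Path

# Hypothetical stand-in for a trained model (the project pickles an XGBoost model).
model = {"intercept": 2.0, "coefficients": [0.5, 1.5]}


def predict(model, features):
    """Linear prediction from the stand-in model's parameters."""
    weighted = sum(c * x for c, x in zip(model["coefficients"], features))
    return model["intercept"] + weighted


with tempfile.TemporaryDirectory() as tmp_dir:
    model_path = Path(tmp_dir) / "model.pkl"

    # Training side: serialize the model to disk.
    with open(model_path, "wb") as f:
        pickle.dump(model, f)

    # Application side: load the model back before serving predictions.
    with open(model_path, "rb") as f:
        restored = pickle.load(f)

print(predict(restored, [4.0, 2.0]))
```

An application would typically load the pickle once at startup and reuse the restored object for every prediction. Pickle files should only be loaded from trusted sources, since unpickling can execute arbitrary code.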

Explainable AI

Explainable AI (XAI) refers to methods and techniques that make the results of AI or machine learning based applications understandable and explainable to humans, meaning the model should not be a black box.

The EU General Data Protection Regulation (GDPR) came into effect on May 25, 2018. Article 22 of the GDPR, “Automated individual decision-making, including profiling”, states that whenever companies make decisions about individuals using an automated decision maker such as an AI or ML system, the individual has the right to have that decision reviewed by a human for further explanation.

Although the data in our project is collected from machines rather than from individuals, it is good practice to include an explainability option in an ML application for better interpretability.

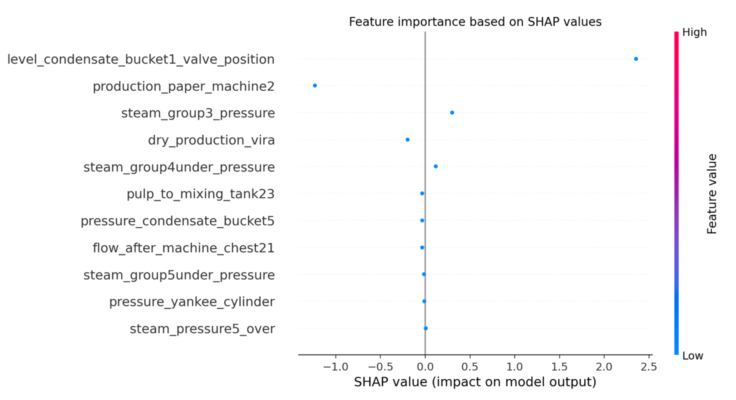

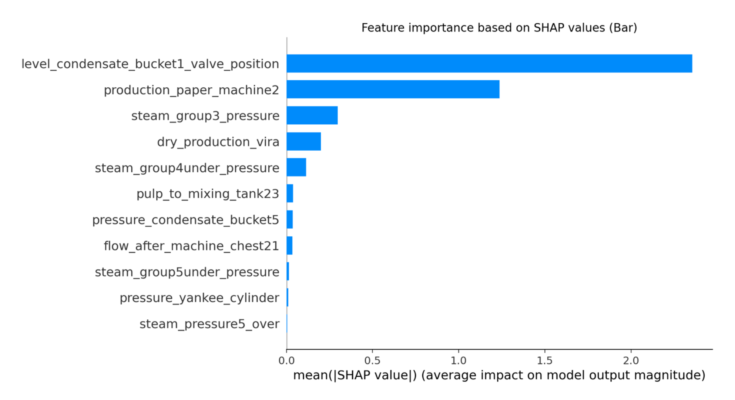

We will use SHAP (SHapley Additive exPlanations), a game-theoretic approach that explains the output of a machine learning model. It builds on Shapley values, which were introduced in cooperative game theory in 1953. The idea was first brought into the machine learning domain by Štrumbelj and Kononenko in 2010; Lundberg and Lee made it more widely accepted in 2017 and later released a Python library for SHAP. We will use that library to explain why our XGBoost model made its decision for the given inputs. It is also worth mentioning that SHAP values are a powerful tool for confidently interpreting tree models such as XGBoost.
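To give a feel for the game-theoretic idea behind SHAP, the sketch below computes exact Shapley values for a tiny hypothetical model by averaging each feature's marginal contribution over all feature orderings. It is an illustration under simplifying assumptions (a single all-zero baseline stands in for "missing" features, and the toy model is invented); it is not how the SHAP library is implemented, and it only scales to a handful of features.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values: average marginal contribution over all orderings."""
    n = len(instance)
    contributions = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)            # start with every feature "absent"
        previous_output = predict(current)
        for feature in order:
            current[feature] = instance[feature]   # switch this feature on
            new_output = predict(current)
            contributions[feature] += new_output - previous_output
            previous_output = new_output
    return [c / len(orderings) for c in contributions]


def toy_model(x):
    """Hypothetical model: one additive term plus one interaction term."""
    return 2.0 * x[0] + x[1] * x[2]


instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, instance, baseline)
print(phi)
print(sum(phi), toy_model(instance) - toy_model(baseline))
```

The values always add up to the difference between the prediction for the instance and the prediction for the baseline, which is the "additive" property in the SHAP name. In this toy model the interaction term is shared equally between the two features that take part in it.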

Conclusion

We have tried to show the whole ML pipeline for predicting the steam flow of a paper machine while it makes a paper roll. The model was based on the small dataset we collected (only about 4,000 rows and 12 columns). Although we compared many algorithms and tools with hyperparameter tuning, in the future we would like to collect much more data when creating the model.